If you develop in Ruby, you can containerize your code pretty easily. In this example I use Sinatra as the framework, but you can swap it for whichever framework you prefer. The code is on GitHub at the end.

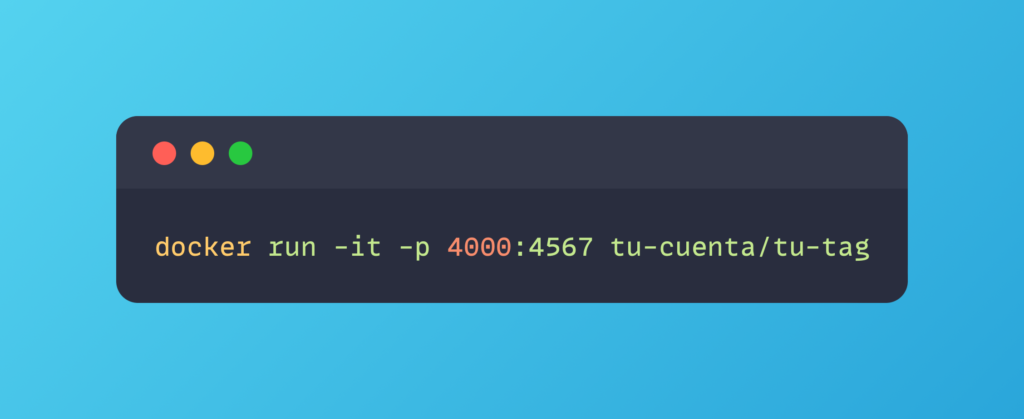

Heads up: 4567 is Sinatra's default port, not Postgres's (PostgreSQL defaults to 5432). If you already have another process listening on 4567 locally, you'll need to stop it or change the app's port.
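If you're not sure whether something is already using that port, you can try to connect to it. If the connection succeeds, something is listening. This is a minimal sketch that uses only Ruby's standard `socket` library. The `port_in_use?` helper and the `PORT` override are my own illustration, not part of the project's code.

```ruby
require "socket"

# Returns true if some process is already accepting TCP connections on
# host:port. Connecting to the loopback address catches servers bound to
# localhost as well as servers bound to all interfaces (0.0.0.0).
def port_in_use?(port, host = "127.0.0.1")
  TCPSocket.new(host, port).close
  true
rescue Errno::ECONNREFUSED, Errno::EADDRNOTAVAIL
  false
end

# Assumption: PORT is an optional override; 4567 is Sinatra's default.
port = Integer(ENV.fetch("PORT", "4567"))

if port_in_use?(port)
  puts "Port #{port} is taken: stop that process or run the app on another port"
else
  puts "Port #{port} is free"
end
```

If the port is taken, Sinatra lets you choose a different one with `set :port, ...` in `app.rb`. Keep the port the container exposes in sync with that change.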

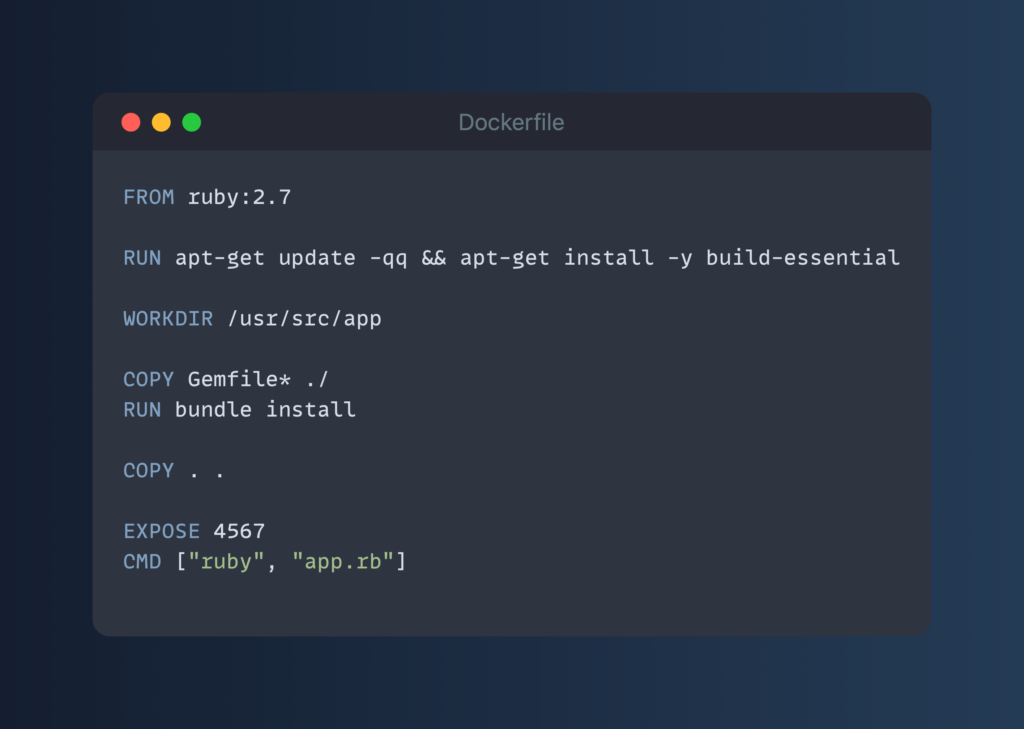

To build a Docker image for this app, create a `Dockerfile` that starts from the `ruby:2.7` image, so you don't have to install anything yourselves. If you need another Ruby version, say 2.6, just change that line to `ruby:2.6` and you're done. BUT REMEMBER THAT RUBY 2.6 REACHED END OF LIFE IN MARCH 2022, SO FOR THE LOVE OF GOD STOP USING IT.

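First, we copy the `Gemfile` and run `bundle install` inside the container so all our dependencies are ready. Copying it before the rest of the code lets Docker cache this layer. That way, gems are only reinstalled when the `Gemfile` changes.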

Second, we copy all the code into a `/usr/src/app` folder.

Finally, we declare which port the container exposes (4567) and the command that starts the server, a simple `ruby app.rb`.

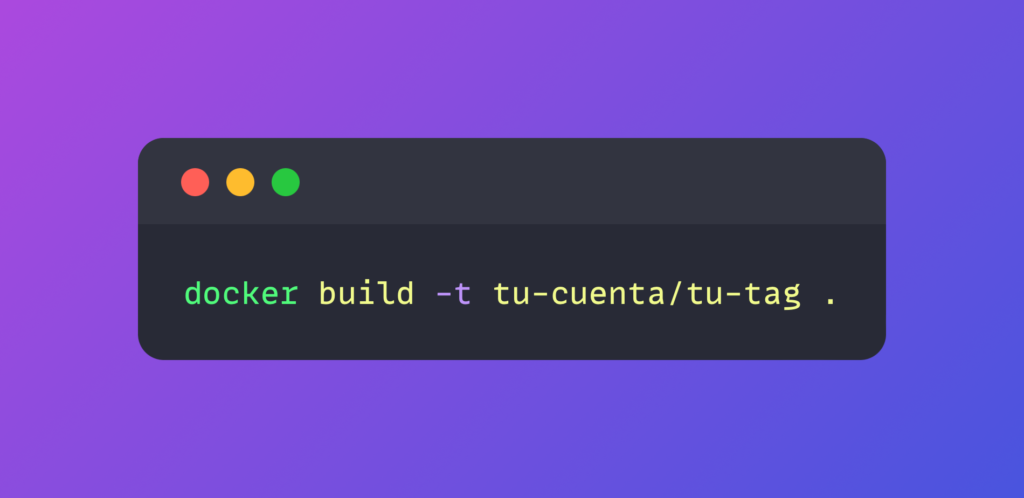

To build this Docker image, just run the following command from the folder that contains your project and its `Dockerfile`:

With that, you can test your Docker image locally. Here we run it and remap the app's port `4567` to port `4000` on my host, which is free. Visit http://localhost:4000 to see the result.
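You can also check the running container from a script instead of the browser. This is a minimal sketch that uses `net/http` from the standard library. The `smoke_test` helper is hypothetical, and it assumes the container was started with host port `4000` mapped to the app's port `4567`.

```ruby
require "net/http"
require "uri"

# Sends a GET request to the containerized app through the remapped host
# port and returns true only if it answers with a 2xx status.
# Assumption: host port 4000 is mapped to the app's port 4567.
def smoke_test(url = "http://localhost:4000/")
  uri = URI(url)
  response = Net::HTTP.start(uri.host, uri.port, open_timeout: 2, read_timeout: 5) do |http|
    http.get(uri.request_uri)
  end
  response.is_a?(Net::HTTPSuccess)
rescue SystemCallError, SocketError, IOError, Net::OpenTimeout, Net::ReadTimeout
  # Connection refused, DNS failure, dropped connection or timeout.
  false
end

if __FILE__ == $PROGRAM_NAME
  puts smoke_test ? "The app is responding" : "No successful response: is the container running?"
end
```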

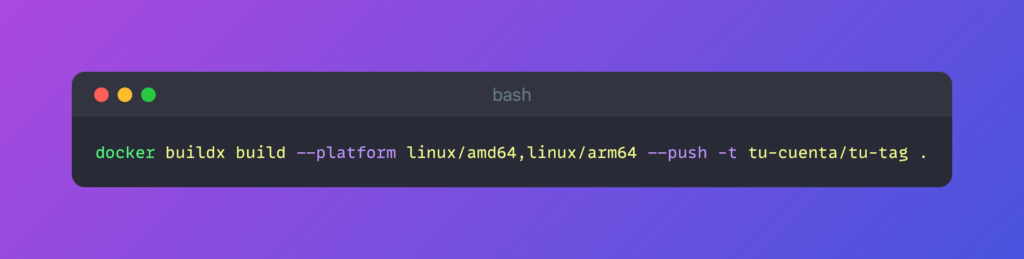

If you're working on a Mac with Apple Silicon, you can build multi-architecture Docker images so that computers with x86 processors can also run the image.
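Docker builds multi-architecture images with `docker buildx` and its `--platform` flag. Once you have one, it can be handy to confirm which variant a machine actually ran. This is a minimal sketch that you run with the `ruby` inside the container. The `docker_platform` helper and its mapping are my own illustration, and they only cover the two architectures mentioned here.

```ruby
require "rbconfig"

# Maps the CPU this Ruby was built for to the matching Docker platform
# string. Inside a container, that reveals which image variant was pulled.
def docker_platform(host_cpu = RbConfig::CONFIG["host_cpu"])
  case host_cpu
  when "x86_64", "amd64" then "linux/amd64"
  when "aarch64", "arm64" then "linux/arm64"
  else "unknown (#{host_cpu})"
  end
end

puts docker_platform
```

On an Apple Silicon Mac, starting the container with `docker run --platform linux/amd64 ...` should make this print `linux/amd64`. In that case the x86 variant runs under emulation.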

Now, when you want to deploy to production, you can use this image: no more copying files over FTP or in ZIPs. Eventually we'll want to deploy this on #Kubernetes.

All the code is at https://github.com/perrohunter/docker-ruby